RNN and Hierarchical Attention Network

In this posts, we will try to get familiar with RNN, self-attention and HAN architectures. Data exists in many types such as tabular, imgaes, graph, texts etc. Sequential data is data arranged in an ordered sequence. It could be ordered by time, e.g time series, or by position, e.g text. generally, we model the sequence as follows: \((x_{1}, x_{2}, …, x_{T})\), where \((x_{i})\) could be a word in case of a text, a real value in case of a time series etc… .

Recurrent Neural Network are very suitable to work with sequential data and make prediction using this kind of data. In this posts, we will Talk about RNNs and some of its variants, GRU and BiGRU and HAN architecture which uses self attention with BidirGRU. Also, we will see an application of HAN to classify IMDB movie reviews. Other interesting kind of RNN such as LSTM will be covered later in another post.

Recurrent Neural Network - RNN

An RNN takes as input a sequence \((x_{1}, x_{2}, …, x_{T})\) and outputs a sequence of hidden states (or representations) \((h_{1}, h_{2}, …, h_{T})\) which are often called annnotations. At each time step \(t\), the RNN unit takes as input \(x_{t}\) and the previous hidden state \(h_{t-1}\) to compute the hidden state as follows:

\[h_{t} = \sigma (U x_{t} + W h_{t-1} + b)\]The hidden representations are used then to make a prediction. This makes RNNs relays only on past information and no future information are used:

\[y_{t} = \sigma (W_{y} h_{t} + b')\]RNNs can process input of any size and the model size remains independent of the input size as the weight are share across time. But they are computationally slow and they have difficulties to learn long term dependancies. So, they suffers from vanishing/exploding gradient because of multiplicative gradient that can be exponentially decreasing or increasing with respect to the number of layers.

Gated Recurrent Unit - GRU

GRU are an RNN that deals with vanishing gradient problem through using specific gates. In GRU we have two gates, reset (relevance) gate and update gate, defined as follows:

\begin{equation}r_{t} = \sigma (U_{r} x_{t} + W_{r} h_{t-1} + b_{r})\end{equation}

\begin{equation}z_{t} = \sigma (U_{z} x_{t} + W_{z} h_{t-1} + b_{z})\end{equation}

The reset gate determines how much information should be discarder from previous time steps stored in \(h_{t-1}\).

So we compute a candidate hidden state using this reset gates as follows :

\[\hat{h}_t = tanh(U_{h} x_{t} + W_{h}(r_{t} \circ h_{t-1}) + b_{h})\]This candidates hidden states is used along with the previous hidden states to obtain the final hidden state by linearly interpolating them using the update gates:

\[h_t= (1 - z_{t}) \circ h_{t-1} + z_{t} \circ \hat{h}_t\]Bidirectional GRU

Bidirectional GRU is GRU variant that consider hidden state from previous and future steps to predict the current hidden state:

\[h_t^{+}= gru(x_{t}; h_{t+1})\] \[h_t^{-}= gru(x_{t}; h_{t-1})\] \[h_t= h_t^{-} \circ h_t^{+}\]Bidirectional GRU can be implemented easily using Pytorch

import torch

gru = nn.GRU(

input_size=d, # The size of the input

hidden_size=n_units, # The size of the hidden state

num_layers=1,

bias=True,

batch_first=True,

bidirectional=True,

)

The bidirectional argument is set to True to specify a biderctional GRU. If it is set to False then we have normal GRU

Self Attention

The attention mechanism was developed in encoder-decoder architecture for NMT context and then used for other context. The attention also used in encoder only settings and it is called self or inner attention. Self attention is the core component of transformers.

The idea behind attention is to use for prediction a weighted sum, where all the weights are determined using trainable parameters, of the all hidden states \((h_{1}, h_{2}, …, h_{T})\) rather than using the last hidden state which is prone to information loss.

The hidden states are passed to a dense layer (eq 3). The alignment coefficient are computed by comparing the output of the dense layer with a trainable context vector u and normalized using Softmax (eq 4). The attentional vector is computed then using a weighted sum of hidden states with alignment coefficient as weights (eq 5).

\begin{equation}u_t = tanh(W h_t) \end{equation} \begin{equation}\alpha_t = \frac{exp(u_{t}^{T}u)}{\sum_{t’=1}^{T}exp(u_{t’}^{T}u)} \end{equation} \begin{equation}s = \sum_{t=1}^{T}\alpha_{t}h_{t}\end{equation}

The self attention can be implemented as follows using Pytorch

import torch

from torch import nn

from torch.utils.data import DataLoader

class AttentionWithContext(nn.Module):

"""

Class implementing self attention mechanism:

u_t = tanh(W . x_t)

a_t = (u_t^T . u)/sum(u_i^T . u)

s = sum(a_t * x_t)

"""

def __init__(self, input_shape, return_coefficients=False, bias=True):

super(AttentionWithContext, self).__init__()

self.return_coefficients = return_coefficients

# Dense Layer W

self.W = nn.Linear(input_shape, input_shape, bias=bias)

self.tanh = nn.Tanh()

# Trainable context vector u

self.u = nn.Linear(input_shape, 1, bias=False)

self.init_weights()

def init_weights(self):

initrange = 0.1

self.W.weight.data.uniform_(-initrange, initrange)

self.W.bias.data.uniform_(-initrange, initrange)

self.u.weight.data.uniform_(-initrange, initrange)

def forward(self, h):

# compute uit = tanh(W . h) where h are the hidden states

uit = self.W(h)

uit = self.tanh(uit) #(N, L, d) --> (N, L, d)

# compute the attention coefficient alphas : u_t^T . u

ait = self.u(uit) #(N, L, 1) --> (N, L, 1)

#Normalizing with softmax

a = torch.exp(ait)

# in some cases especially in the early stages of training the sum may be almost zero

# and this results in NaN's. A workaround is to add a very small positive number ε to the sum.

eps = 1e-9

a = a / (torch.sum(a, axis=1, keepdim=True) + eps) #(N, L, 1) --> (N, L, 1)

# Compute the attentional vector : s = sum(a_t * x_t)

weighted_input = torch.sum(torch.mul(a, h), axis=1)

if self.return_coefficients:

return (

weighted_input,

a,

) ### [attentional vector, coefficients] ### use torch.sum to compute s

else:

return weighted_input ### attentional vector only ###

The attention mechanism can provide similar summation weights for all the hidden states. A penalization term was proposed by [9] to encourage the diversity of summation weight vectors. The penalization term is as follows:

\[P = {\lVert (AA^{T} - I) \rVert}_{F}^{2}\]where F refer to the frobenius norm of a matrix \({\lVert A \rVert}_{F}^{2} = \sum_{i=1}^{n}\sum_{j=1}^{n} a_{ij}^{2}\)

def Frobenius(mat):

size = mat.size()

if len(size) == 3: # batched matrix

ret = torch.sum(torch.sum((mat ** 2), 2), 1).squeeze() + 1e-10

return torch.sum(ret) / size[0]

else:

raise Exception('matrix for computing Frobenius norm should be with 3 dims')

# Create a batch of indentity matrices of size L where L represent tha length of the sequence.

I = Variable(torch.zeros(batch_size, L, L))

for i in range(batch_size):

for j in range(L):

I.data[i][j][j] = 1

#Computing the penalization term

A_T = torch.transpose(A, 1, 2).contiguous()

P = Frobenius(torch.bmm(A, A_T) - I[:A.size(0)])

Hierarchical Attention Network - HAN

Hierarchical Attention Network is an interesting architecture which use self attention and was proposed by [5]. The architecture contains many level and each level is RNNs followed by self attention layer. Which makes this architecture suitable for data that has a hierarchy e.g word —> sentence —> document. First, a sentence encoder produces an embedding for each sentence from word embeddings; then a document encoder produces the document embedding vector from the sentence embeddings previously produced. Each encoder is a Bidir GRU followed by a self attention.

HAN makes sense for two reason: First it matches the natural hierarchy of a document; second; it allows the model to first determine which words are important in each sentence and then which sentence are important overall.

By being able to re-weight the word attentional coefficients by the sentence attentional coefficients the model captures the fact that a word may be very important in a sentence but it’s less important in another sentence.

class AttentionBiGRU(nn.Module):

def __init__(self, input_shape, n_units, index_to_word, dropout=0):

super(AttentionBiGRU, self).__init__()

self.embedding = nn.Embedding(

len(index_to_word) + 2, # vocab size

d, # dimensionality of embedding space

padding_idx=0,

)

self.dropout = nn.Dropout(drop_rate)

self.gru = nn.GRU(

input_size=d,

hidden_size=n_units,

num_layers=1,

bias=True,

batch_first=True,

bidirectional=True,

)

self.attention = AttentionWithContext(

2 * n_units, # the input shape for the attention layer

return_coefficients=True,

)

def forward(self, sent_ints):

sent_wv = self.embedding(sent_ints)

sent_wv_dr = self.dropout(sent_wv)

sent_wa, _ = self.gru(sent_wv_dr) # GRU layer

sent_att_vec, word_att_coeffs = self.attention(

sent_wa

) # attentional vector for the sent

sent_att_vec_dr = self.dropout(sent_att_vec)

return sent_att_vec_dr, word_att_coeffs

class TimeDistributed(nn.Module):

def __init__(self, module, batch_first=False):

super(TimeDistributed, self).__init__()

self.module = module

self.batch_first = batch_first

def forward(self, x):

if len(x.size()) <= 2:

return self.module(x)

# Squash samples and timesteps into a single axis

x_reshape = x.contiguous().view(

-1, x.size(-1)

) # (samples * timesteps, input_size) (224, 30)

sent_att_vec_dr, word_att_coeffs = self.module(x_reshape)

# We have to reshape the output

if self.batch_first:

sent_att_vec_dr = sent_att_vec_dr.contiguous().view(

x.size(0), -1, sent_att_vec_dr.size(-1)

) # (samples, timesteps, output_size)

word_att_coeffs = word_att_coeffs.contiguous().view(

x.size(0), -1, word_att_coeffs.size(-1)

) # (samples, timesteps, output_size)

else:

sent_att_vec_dr = sent_att_vec_dr.view(

-1, x.size(1), sent_att_vec_dr.size(-1)

) # (timesteps, samples, output_size)

word_att_coeffs = word_att_coeffs.view(

-1, x.size(1), word_att_coeffs.size(-1)

) # (timesteps, samples, output_size)

return sent_att_vec_dr, word_att_coeffs

class HAN(nn.Module):

def __init__(self, input_shape, n_units, index_to_word, dropout=0):

super(HAN, self).__init__()

self.encoder = AttentionBiGRU(input_shape, n_units, index_to_word, dropout)

self.timeDistributed = TimeDistributed(self.encoder, True)

self.dropout = nn.Dropout(drop_rate)

self.gru = nn.GRU(

input_size=2 * n_units, # the input shape of GRU layer

hidden_size=n_units,

num_layers=1,

bias=True,

batch_first=True,

bidirectional=True,

)

self.attention = AttentionWithContext(

2

* n_units, # the input shape of between-sentence attention layer

return_coefficients=True,

)

self.lin_out = nn.Linear(

2 * n_units, 1 # the input size of the last linear layer

)

self.preds = nn.Sigmoid()

def forward(self, doc_ints):

sent_att_vecs_dr, word_att_coeffs = self.timeDistributed(

doc_ints

) # get sentence representation

doc_sa, _ = self.gru(sent_att_vecs_dr)

doc_att_vec, sent_att_coeffs = self.attention(doc_sa)

doc_att_vec_dr = self.dropout(doc_att_vec)

doc_att_vec_dr = self.lin_out(doc_att_vec_dr)

return self.preds(doc_att_vec_dr), word_att_coeffs, sent_att_coeffs

While HAN is an interesting architecture, it has a major limitation. For a given sentence, the embedding is produced in isolation. This means, it ignores the other sentences. So, If redundant parts exist in the document; the model can spend the attention budget on them and neglects the others aspects.

Dataset

The dataset considered in this application is IMDB review dataset. It contains reviews about movies and their classes, positive or negative. The goal is to classify these reviews into positive or negative.

Each review is converted to an array of integers that has a size of \((1, doc_size, sent_size)\). the doc{_}size specifies the maximum number of allowed sentences per document and sent{_}size the specifies the maximum number of allowed words per sentence. Smaller sentences are padded by a special padding token and smaller documents are padded with sentences containing only a special padding token. Longer documents or sentences are truncated.

The mapping of a word to an integers is done by creating a vocabulary dictionary from the training set where each word has an integer value. The most frequent word has a value of 2. 0 and 1 are reserved for special token and out of vocabulary token.

An example of a sentence and its mapping to an array of integers:

Sentence:

"There 's a sign on The Lost Highway that says : OOV SPOILERS OOV ( but you already knew that , did n't you ? )"

Corresponding array:

array([ 130, 14, 6, 1991, 28, 22, 2746, 17943, 13,

564, 85, 1, 3225, 1, 25, 26, 29, 488,

697, 13, 3, 84, 27, 29, 45, 24, 0,

0, 0, 0])

We have some 0 in the end of the array as the choosen sent_size is 30 and the sentence needs to be padded because it contains only 26 words.

Training

The training consists of minimizing a loss function. As we are dealing with classification problem, the loss used is Binary Cross Entropy (BCE):

\[L(\hat{y}, y) =\frac{1}{N} \sum_{i=1}^N y_i log(\hat{y}_i) + (1-y_i)log(1-\hat{y}_i)\]model = HAN(input_size, n_units, index_to_word).to(device)

model = model.double()

lr = 0.001 # learning rate

criterion = (

nn.BCELoss()

) # Binary cross entropy from torch.nn: https://pytorch.org/docs/stable/nn.html#loss-functions

optimizer = torch.optim.Adam(model.parameters(), lr=lr) # Adam optimizer

def train(

x_train=my_docs_array_train,

y_train=my_labels_array_train,

x_test=my_docs_array_test,

y_test=my_labels_array_test,

word_dict=index_to_word,

batch_size=batch_size,

):

train_data = get_loader(x_train, y_train, batch_size)

test_data = get_loader(my_docs_array_test, my_labels_array_test, batch_size)

best_validation_acc = 0.0

p = 0 # patience

for epoch in range(1, nb_epochs + 1):

losses = []

accuracies = []

with tqdm(train_data, unit="batch") as tepoch:

for idx, data in enumerate(tepoch):

tepoch.set_description(f"Epoch {epoch}")

model.train()

optimizer.zero_grad()

input = data["document"].to(device)

label = data["label"].to(device)

label = label.double()

out = model.forward(input)

output = out[0][:, -1]

loss = criterion(output, label) # compute the loss

loss.backward()

torch.nn.utils.clip_grad_norm_(

model.parameters(), 0.5

) # Clipping to prevent exploding gradient

optimizer.step()

losses.append(loss.item())

accuracy = torch.sum(torch.round(output) == label).item() / batch_size

accuracies.append(accuracy)

tepoch.set_postfix(

loss=sum(losses) / len(losses),

accuracy=100.0 * sum(accuracies) / len(accuracies),

)

train_acc = evaluate_accuracy(train_data, False)

test_acc = evaluate_accuracy(test_data, False)

print(

"===> Epoch {} Complete: Avg. Loss: {:.4f}, Validation Accuracy: {:3.2f}%".format(

epoch, sum(losses) / len(losses), 100.0 * test_acc

)

)

if test_acc >= best_validation_acc:

best_validation_acc = test_acc

print("Validation accuracy improved, saving model...")

torch.save(model.state_dict(), "./best_model.pt")

p = 0

print()

else:

p += 1

if p == my_patience:

print(

"Validation accuracy did not improve for {} epochs, stopping training...".format(

my_patience

)

)

print("Loading best checkpoint...")

model.load_state_dict(torch.load("./best_model.pt"))

model.eval()

print("done.")

train()

Analysis

Using the mean of the hidden state of GRU instead attention will lead to treating the hidden state in a sequence equally. This lead to the same contribution of irrelevant elements to the prediction with the same importance as relevant element. Which can be subject to poor performances. So, the advantage of the attention mechanism is to have weights releaving the importance of each hidden state; and so each element, in the sequence (words in case of sentence, and sentences in case of a document).

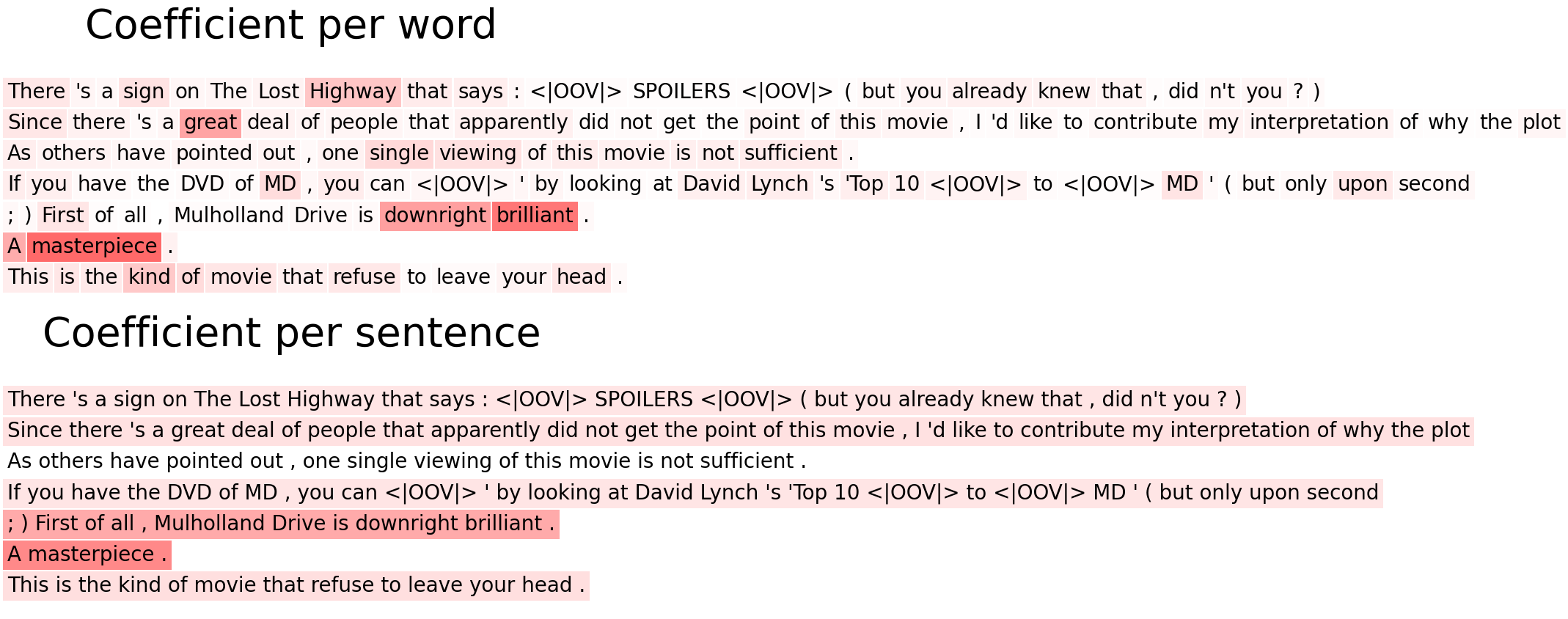

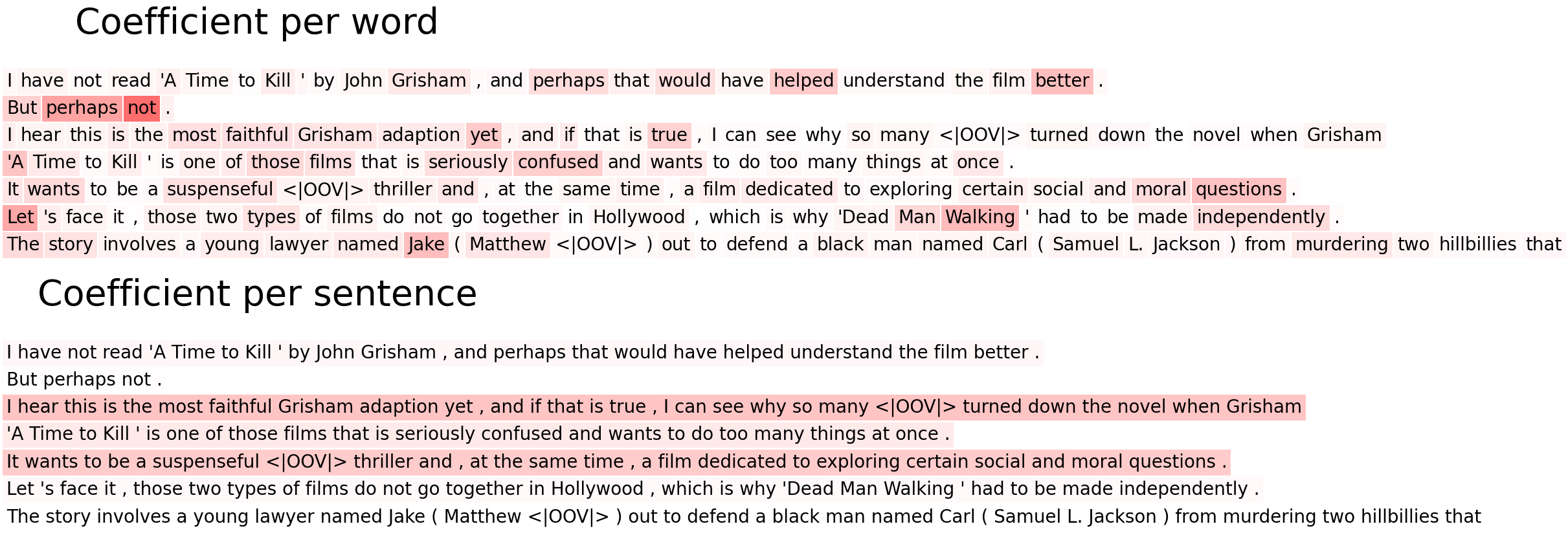

The following plots, shows the attention coefficient per words and per sentences of positive and negative reviews from the test set. The more the sentence or the word is red, the more the attention of this element is high. <|OOV|> means Out Of Vocabulary, is a token to replace words that are not in the training vocabulary.

Example of positive review :

Example of Negative review :

From the plots, we can see that words and sentence that relevant and more linked to a positive or negative reviews have a high attention coefficient regarding the other words. For example in the positive review, we have words such as “Brilliant”, “Master Piece”, “Great” which are positive and have a high attention. Same remark for sentences such as “:) First of all, Mulholland Drive is downright brilliant.” or “A masterpiece”. In the negative review we have words or sentences with a negative tonality have a high attention; such as “confused” “suspenful”.